A sybil attack is when one person creates many accounts or bots and passes them off as separate people.

Spam is when you get unwanted messages.

The problem

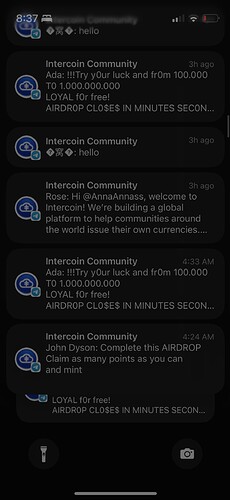

Look at our Intercoin Telegram channel, for instance - now accounts on telegram come in, post a message and immediately delete it. It must be a service. And Telegram allows it. This results in notifications until people mute our channel:

With ChatGPT it can become much worse, because the bots won’t be so easy to spot: they’ll actually generate “thoughtful” text for a while until they amass trust / respect, and then start gradually selling people on stuff. But even the ones that are easy to spot seem to be impossible to stop on Telegram. People will have to mute our channel because the bots can strike at any time!

In Qbix, how can we mitigate this situation?

PLEASE REPLY TO EACH POINT BELOW AFTER READING THE WHOLE THING. YOUR FEEDBACK WILL SHAPE HOW QBIX PLATFORM 3.0 HANDLES USERS. LET’S PUT OUR HEADS TOGETHER!

-

I think user accounts can sign up, but they shouldn’t be able to use any serious resources, and community members’ attention is the most important resource. So we can give each new user a small daily quota to try things out: they can’t store many files, can’t make too many posts, can’t make too many requests, etc. Come back tomorrow… This also makes a real user bookmark our app on their home screen, enable notifications, and become more loyal over time, instead of binging. Wordle did this, and it helped them go viral.
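
To make the quota idea concrete, here is a minimal sketch, not how Qbix does it. The class name `DailyQuota`, the action names, and the limit numbers are all made up for illustration, and the counters live in memory instead of a database.

```python
from collections import defaultdict
from datetime import date

# Hypothetical per-day limits for a brand-new user; the numbers are placeholders.
NEW_USER_DAILY_QUOTA = {"post": 5, "upload": 3, "request": 200}


class DailyQuota:
    """Counts actions per (user, day, action) and refuses once the limit is hit."""

    def __init__(self, limits=None):
        self.limits = dict(limits or NEW_USER_DAILY_QUOTA)
        self._used = defaultdict(int)

    def try_consume(self, user_id, action, today=None):
        """Return True and record the action if the user is still under quota."""
        today = today or date.today()
        key = (user_id, today, action)
        if self._used[key] >= self.limits.get(action, 0):
            return False  # over quota: come back tomorrow
        self._used[key] += 1
        return True


quota = DailyQuota({"post": 2})
day1, day2 = date(2024, 1, 1), date(2024, 1, 2)
results = [quota.try_consume("alice", "post", day1) for _ in range(3)]
next_day = quota.try_consume("alice", "post", day2)
```

With a limit of two posts, the third attempt on the same day is refused, and the counter starts fresh the next day because the date is part of the key.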

-

When users prove themselves by earning credits, e.g. by watching minutes of our video content or getting their chats upvoted, they can do more. Upvotes must be zero-sum: if someone upvotes you, they transfer their own credits to you and no longer have those credits. The more credits a user has, the more visibility they have in the community, and their posts are promoted higher for other users (like paying for advertising in a community).
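
A rough sketch of what “zero-sum” means here, assuming credits are whole numbers kept in a simple balance table. The `Ledger` class and its method names are hypothetical, just to show that an upvote moves credits and never creates them.

```python
class Ledger:
    """Tracks credit balances; upvotes move credits rather than creating them."""

    def __init__(self, balances):
        self.balances = dict(balances)

    def upvote(self, voter, author, amount=1):
        """Transfer `amount` credits from voter to author, or raise if impossible."""
        if voter == author:
            raise ValueError("cannot upvote yourself")
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.balances.get(voter, 0) < amount:
            raise ValueError("voter does not have enough credits")
        self.balances[voter] -= amount
        self.balances[author] = self.balances.get(author, 0) + amount

    def total(self):
        """Sum of all balances; an upvote must leave this unchanged."""
        return sum(self.balances.values())


ledger = Ledger({"alice": 10, "bob": 0})
before = ledger.total()
ledger.upvote("alice", "bob", 3)
after = ledger.total()
```

Because the total never changes, a ring of sybil accounts upvoting each other only shuffles the credits they already had.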

-

They can also sign up to have a recurring amount of credits deducted every week in exchange for a membership. Or they can be invited by an admin for a free membership, or an admin can give them a free membership for a while. Membership means a role in a community: it means they are trusted in that community (like a blue checkmark on Twitter). There may be different levels of membership.

-

Chats should be threaded (meaning a tree where a new chat can start as a reply to a message in another chat, like file folders), so you can mute one thread and still listen on others. Telegram chats are all-or-nothing, notifications on or off for the whole chat… and honestly most of the messages end up being “hi everyone” and “welcome” in a linear chat. Useless. Better to have threads.

-

In the beginning, when a community or topic/category is still new and isn’t getting much engagement, it may lower the requirements and allow anyone to post at least some content for a reduced number of credits. But as its content reaches a normal level, it removes this “sponsorship discount” on credits. Maybe if it gets too full, it increases the cost in credits. So this would be a cost scaling factor reflecting how much that community or category is encouraging / discouraging new content. It can also be used to prevent flame wars, etc. on a thread and push them into subthreads, for those who still care.
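
One possible shape for such a scaling factor, as a sketch only. The linear ratio of recent posts to a target level, the clamp bounds, and the function names are all assumptions; the real curve could be anything.

```python
def cost_factor(recent_posts, target_posts, min_factor=0.25, max_factor=4.0):
    """Scale factor: below 1 while the category is quiet, above 1 when it is too full."""
    if target_posts <= 0:
        raise ValueError("target_posts must be positive")
    ratio = recent_posts / target_posts
    return max(min_factor, min(max_factor, ratio))


def posting_cost(base_cost, recent_posts, target_posts):
    """Credits charged for one post in a category with the given activity level."""
    return base_cost * cost_factor(recent_posts, target_posts)


quiet = posting_cost(8, recent_posts=0, target_posts=100)      # sponsorship discount
normal = posting_cost(8, recent_posts=100, target_posts=100)   # discount removed
crowded = posting_cost(8, recent_posts=1000, target_posts=100) # surcharge
```

The same function could be applied per thread, so that a heated thread gets expensive while its subthreads stay cheap.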

-

Moderation. When someone is found to be disrupting a chat, a moderator (human or automatic) can increase their credit cost on an individual basis, or throttle them so they have to wait longer between messages. If they then post helpful messages, the individual cost penalty would be reduced. Maybe helpful, upvoted messages can actually result in the cost being lowered (i.e. encouraging that user to post more).
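
Here is a small sketch of per-user penalties and throttling, under assumed numbers. `PosterState`, `penalize`, `reward`, and `can_post` are hypothetical names, and the doubling / halving factors and the floor of 0.5 are placeholders.

```python
from dataclasses import dataclass


@dataclass
class PosterState:
    cost_multiplier: float = 1.0    # 1.0 means the normal credit cost
    cooldown_seconds: float = 0.0   # minimum wait between messages
    last_post_at: float = float("-inf")


def penalize(state, factor=2.0, extra_cooldown=30.0):
    """Moderator action: raise this user's cost and make them wait longer."""
    state.cost_multiplier *= factor
    state.cooldown_seconds += extra_cooldown


def reward(state, factor=0.5, floor=0.5):
    """Helpful, upvoted message: ease the penalty, never going below `floor`."""
    state.cost_multiplier = max(floor, state.cost_multiplier * factor)
    state.cooldown_seconds /= 2


def can_post(state, now):
    """True once the user's cooldown since their last message has elapsed."""
    return now - state.last_post_at >= state.cooldown_seconds


state = PosterState()
penalize(state)                    # multiplier 2.0, cooldown 30 s
state.last_post_at = 100.0
blocked = can_post(state, 110.0)   # only 10 s have passed
allowed = can_post(state, 130.0)   # 30 s have passed
reward(state)                      # multiplier back to 1.0, cooldown 15 s
```

A floor below 1.0 lets consistently helpful users end up paying less than the normal rate, which is the “encouraging that user to post more” case.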

-

Members can invite guests, who can start to earn credits. But if you don’t have the “member” label, your profile and content won’t show up in searches unless the searching user explicitly indicates they want to see all the guests / crap.

-

Instead of just admins approving everyone, huge communities may set up hierarchies of membership. Admins can grant roles to moderators, moderators can grant roles to facilitators, facilitators can grant roles to members. Something like that.

-

A more complicated alternative to point 8 (hierarchies of membership) is to track the weight of a branch of invites. We already track who invited whom, in the Streams table. We can also start storing weights of invites. To become a member, you need invites from multiple people whose weights total N (let’s say N = 3 for communities). So the streams_invite and streams_invited tables can be used to know the users who endorsed your label of “member”. If a user is found to have been corrupted and giving out a lot of memberships, then the entire tree of invites is invalidated, meaning we lower the weight of all their invites, perhaps to zero (or even negative?). That may then revoke the role from users they invited who don’t have enough endorsement weight anymore, and they have to get it from some other admins / members. The people they invited may not be members anymore, so their invite weights go to zero also. Through this cascade, many people may lose membership, because this entire tree branch could be compromised. Real guests who want to become members would then seek
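
The cascade can be sketched as recomputing membership outward from a set of trusted roots (admins). This is one assumed way to do it, not the actual Qbix schema: the `endorsements` dictionary stands in for whatever the invite tables store, and `compute_members` and `banned` are hypothetical names. A banned user’s invites simply count as weight zero.

```python
THRESHOLD = 3  # the N from the text


def compute_members(roots, endorsements, threshold=THRESHOLD, banned=frozenset()):
    """Grow the member set from trusted roots until no one else qualifies.

    endorsements maps invitee -> {inviter: weight}. Only invites coming from
    current members count, so removing one inviter can cascade down a branch.
    """
    members = set(roots) - set(banned)
    changed = True
    while changed:
        changed = False
        for user, given in endorsements.items():
            if user in members or user in banned:
                continue
            weight = sum(w for inviter, w in given.items() if inviter in members)
            if weight >= threshold:
                members.add(user)
                changed = True
    return members


roots = {"admin"}
endorsements = {
    "mallory": {"admin": 3},
    "bot1": {"mallory": 3},
    "bot2": {"mallory": 2, "bot1": 1},
    "carol": {"admin": 2, "mallory": 1},
    "dave": {"admin": 3},
}
before = compute_members(roots, endorsements)
after = compute_members(roots, endorsements, banned={"mallory"})
```

In this example, banning mallory drops bot1 and bot2 (her whole branch) and also carol, who is now one point short and needs another endorsement, while dave is unaffected. Building up from the roots also means a ring of accounts endorsing each other cannot keep itself alive.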

-

Every user can point to N other users and say those users are “better than me” in a certain topic / interest / category. For example, someone is a better dancer or singer than me. If you get many endorsements like this, it is a reliable honest signal (hard to fake) that forms a Directed Acyclic Graph (DAG), usually a tree. So the higher up people are, the more weight their decisions can have in anything that relates to that category. Even the weight of a relation to the category itself can be determined by who is relating it: if I am a major singer and someone posts a link to a webpage about singing in the “singing” interest category, then my upvote would add more weight to that relation than a random person’s upvote.

-

It seems that weight is relative to a category / community, while “credits” currently are across all communities. If A invites B and B is a very helpful member, then A should get a “commission” in perpetuity from B, since A brought them in. But this has to be zero-sum, so B actually gives e.g. 10% of all credits they earn or buy to A. You can read more about a fully fleshed out idea here: https://qbix.com/Twitternomics.pdf
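
As a quick illustration of the zero-sum commission, assuming whole-number credits, a flat 10% rate, and only one level of inviter (all assumptions; the function and variable names are made up):

```python
COMMISSION_PERCENT = 10  # the "e.g. 10%" from the text


def credit_with_commission(balances, inviter_of, user, amount,
                           percent=COMMISSION_PERCENT):
    """Add credits earned or bought by `user`, passing a cut to their inviter.

    The commission comes out of the user's own credits, so nothing is created.
    Returns the commission that was paid (0 if the user has no inviter).
    """
    inviter = inviter_of.get(user)
    commission = amount * percent // 100 if inviter is not None else 0
    balances[user] = balances.get(user, 0) + amount - commission
    if inviter is not None:
        balances[inviter] = balances.get(inviter, 0) + commission
    return commission


balances = {"A": 0, "B": 0}
inviter_of = {"B": "A"}  # A invited B
paid = credit_with_commission(balances, inviter_of, "B", 100)
```

B earns 100 credits, keeps 90, and A receives 10, so the total added to the system is still exactly 100.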

-

So, what do you think? The general principle is that the concern about whether content is posted by a bot or a human is actually a special case of a concern about whether someone is respected or not (respect), or whether someone is producing quality content or not (merit). These are two separate questions: a bot can create a lot of quality content, but be unwanted because it is a bot. Counting the points above in order, the content is managed by 2, 4, 5, 6 (credits, threads, cost scaling, moderation), while the respect is managed by 3, 7, 8, 9, 10 (memberships, guests, hierarchies, invite weights, endorsements).

Please, in your answer, number the points you are responding to.