The Need for Trust

Every day, people trust their devices, operating systems and browsers with their credentials and identity when they access services online, whether it’s their bank or their social platform. The bigger your bank balance, or the more followers you have on a platform, the greater the need for security.

Apps like Signal and Telegram are run by billionaires who describe themselves as anarchists, and promise that their apps practice end-to-end encryption, with no back doors. Most people just take their word for it when they send sensitive information. But even if it is true, it’s still problematic that they and their small development teams are our last line of defense against infiltration and corruption of their platforms. Their teams have been approached by intelligence agencies to install back doors. Their centralized apps can also be banned from the app stores. This is discussed in greater detail here:

Web3 solution

Web3 solves this problem by having open source code run by thousands of computers around the world. Back in 2017, we spun off a company called Intercoin, which has raised money and built decentralized applications to manage governance, elections, payments, as well as high-value roles and permissions. We are integrating these applications into Qbix, so if a community amasses a lot of value and power, it can be distributed across individual decisions made by members of the community. People sign individual transactions and post them to the blockchain, where smart contracts process them and update the blockchain state. You may want to read more about security and usability issues with Web3 wallets, but on the whole, they have enabled a vastly more secure ecosystem than the current Web2 space.

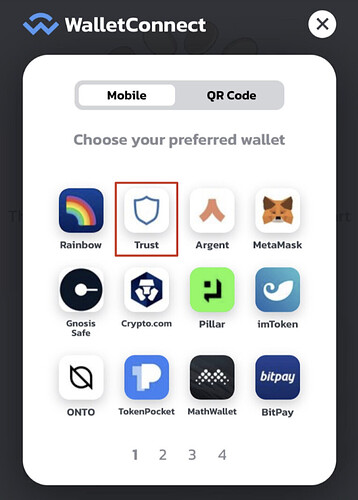

With Web3, users would do this with a “cryptocurrency wallet”. They’d have to download a wallet app or browser extension, and set it up, before they could be in a position to review and sign transactions. And even then, they’d have to click on the wallet in an interface like WalletConnect.

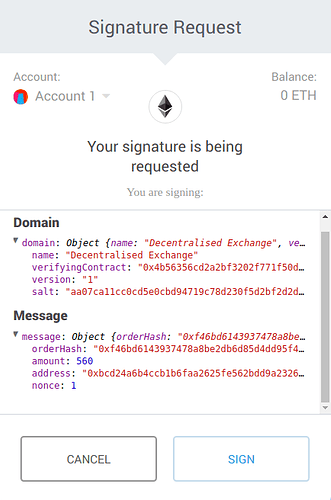

Then they’d review something like this and sign it:

This could be a lot of work, so companies like FortMatic (now called Magic) have wallets that make use of hardware security modules or trust Amazon Web Services to manage the private keys. People end up trusting Amazon, but Qbix wants to build open alternatives to Big Tech that are nevertheless secure on the open web. The arrangement described below accomplishes this.

Trusting Client Apps

Whatever you are trying to do, it would be great to know that the code you just downloaded or sideloaded does what people claim it does. When the back-end is not Web3, it’s hard to know what the web servers do remotely, although early solutions have emerged where you trust chipmakers like Intel. On the client side, the situation is much simpler: we want to know that our “user agents” like browsers and wallets won’t simply phone home the sensitive data we are entrusting to them.

We’ve written about the case for building client-first web apps. Our chief architect, Greg Magarshak, gave talks to developers about the many reasons to do so. Since 2012, he has been thinking and writing about identity and trust.

But it’s not enough to make web apps that run on the client without the server. We need to have a way for the user to be confident they can trust these apps. The browser, their user-agent, must have a way to guarantee this. Recently, browser makers have shipped features that do just that.

Hashes to the Rescue

Subresource integrity is a feature that websites can now use to make the browser ensure that the content loaded is exactly what was expected. Sites should use this whenever they load data from other sites, “even if it is Google”. Because why take a chance, when you don’t have to?

    <script
      src="https://example.com/example-framework.js"
      integrity="sha384-oqVuAfXRKap7fdgcCY5uykM6+R9GqQ8K/uxy9rx7HNQlGYl1kPzQho1wx4JwY8wC"></script>

These hashes can be standardized and form the basis of a software directory that refers to various resources, whether they contain HTML, Javascript, images, or anything else. You can be sure that, whichever web server on the internet ends up sending that resource to your browser, it will match exactly what you want.
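As a rough illustration of where such a hash comes from, the sketch below computes an integrity value under Node.js. The helper names sriHash and scriptTag are made up for this example, and the crossorigin attribute is added on the assumption that the resource is served from another origin, where browsers need a CORS request to perform the check.

```javascript
const crypto = require('node:crypto');

// Compute a Subresource Integrity value: "<algorithm>-<base64 digest>".
function sriHash(content, algorithm = 'sha384') {
  const digest = crypto.createHash(algorithm).update(content).digest('base64');
  return `${algorithm}-${digest}`;
}

// Build a <script> tag that pins the resource to the hash of its content.
// (Hypothetical helper; a real build tool would also escape the src value.)
function scriptTag(src, content) {
  return `<script src="${src}" integrity="${sriHash(content)}" crossorigin="anonymous"></script>`;
}

// Example: pin a tiny script body to its own hash.
const source = 'console.log("hello");';
console.log(scriptTag('https://example.com/example-framework.js', source));
```

Because the hash depends only on the bytes of the resource, anyone holding the same file can recompute it and get the same value, which is what makes a shared directory of hashes workable.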

An HTML document may contain lots of elements referencing various resources, like <img> elements referencing images, <script> elements referencing Javascript files, and <iframe> elements referencing other HTML documents. If all of these elements have an integrity attribute, the document can be said to be predictable. We know what it will render and how it will behave, every time. The only variation can happen inside HTML documents rendered inside <frame> and <iframe> elements, but if those are also made predictable all the way down, then the entire document becomes completely predictable.

Whitelists of Audited Digital Content

Auditors around the world can review these resource hashes to make sure they are “safe”. They can then use their private keys to maintain signed whitelists of resources they vouch for. Some auditors may review images, video and audio files, and whitelist only the ones that they know are “safe for work”, or classify them into various topics or categories. Other auditors may review Javascript code intended to be added via <script> tags, and HTML files intended to be loaded inside <iframe> tags, to make sure that they are not doing things like sending your sensitive data to their own servers.
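To make the idea of a signed whitelist concrete, here is a minimal sketch under Node.js. It assumes Ed25519 signatures and a whitelist format of sorted integrity strings joined by newlines; neither choice comes from the article, and the function names are hypothetical.

```javascript
const crypto = require('node:crypto');

// Canonical bytes of a whitelist: sorted integrity strings, one per line.
// (An assumed format, chosen so that signer and verifier hash identical bytes.)
function canonicalize(hashes) {
  return Buffer.from([...hashes].sort().join('\n'), 'utf8');
}

// The auditor signs the canonical whitelist with their private key.
function signWhitelist(hashes, auditorPrivateKey) {
  const signature = crypto.sign(null, canonicalize(hashes), auditorPrivateKey);
  return { hashes: [...hashes].sort(), signature: signature.toString('base64') };
}

// Anyone holding the auditor's public key can check the whitelist is untampered.
function verifyWhitelist(signed, auditorPublicKey) {
  return crypto.verify(
    null,
    canonicalize(signed.hashes),
    auditorPublicKey,
    Buffer.from(signed.signature, 'base64')
  );
}

// Example: an auditor vouches for one resource hash.
const auditor = crypto.generateKeyPairSync('ed25519');
const whitelist = signWhitelist(
  ['sha384-oqVuAfXRKap7fdgcCY5uykM6+R9GqQ8K/uxy9rx7HNQlGYl1kPzQho1wx4JwY8wC'],
  auditor.privateKey
);
console.log(verifyWhitelist(whitelist, auditor.publicKey)); // prints true
```

Adding or removing a hash after signing makes verification fail, so a whitelist can be mirrored by untrusted hosts without losing its meaning.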

This is related to the concept of reproducible builds, but they are not required since Web browsers can simply apply integrity checks to the actual HTML and Javascript source code. The Web has been radically open source, as browsers have allowed any user to view the source of a website, scripts, or any other resources it loads. (In fact, this is what has enabled so many web developers to get their start in the industry).

In any case, auditors of HTML documents can label them as completely predictable and safe. They can in turn be included (via <iframe> elements with the integrity attribute) in other HTML documents which are also labeled as completely predictable and safe. Thus, entire software packages and releases can be prepared, and auditors can officially add them to their signed whitelists.

Websites Loading Whitelisted Resources

Website owners can then require a resource hash to be on one or more whitelists before they include it from a free market of hosting companies. Since these hashed resources are static and never change, they can be heavily cached by the user’s browser (or other user agent). Occasionally, a server may want to require session cookies and conditionally serve a resource. But most of the time, the resources are not just static, they’re publicly accessible to anyone who requests them. In that case, they can be cached at many levels, including CDNs and proxies (as long as they have the origin server’s https certificate).

Public and static resources can even be stored on IPFS and Hypercore, with multiple hosts agreeing to join a swarm and serve them. The Brave browser supports ipfs:// directly, while Beaker browser supports hyper://. If more browsers do this, then we won’t need to rely on https:// hosts anymore for these resources. As it is now, though, we still need to rely on IPFS gateways or run our own Hypercore gateway in order to load the resources via HTTP in all browsers. You can read more about IPFS and Hypercore and how they compare.

Qbix is also planning to build out an ecosystem around its platform that will support micropayments between sites for hosting and running digital apps by a free market of Qbix hosts (like those that host Wordpress, Magento, etc). While IPFS and Hypercore can be used for loading static content like files and images, Qbix micropayments are for running app back-ends (with PHP, Node.js and MySQL), as well as enabling a new economy for monetizing digital content without the need for paywalls and other annoying friction.

Trusted User Interfaces

Users may want to be confident that a certain <iframe> contains documents whose integrity hash is on a whitelist they trust. Once they are confident, they can start reviewing and signing various payloads using the “completely trusted” software package inside the iframe.

Any Trusted User Interface (TUI) loaded in the iframe should make use of Intersection Observer 2.0 to make sure any buttons like Submit or Cancel aren’t being clickjacked. Web3 Wallets like FortMatic (Magic) should be doing the same thing.
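A clickjacking guard along these lines might look like the sketch below. It relies on the trackVisibility and delay options and the isVisible flag from Intersection Observer v2; the function name guardButton and the injectable constructor parameter are assumptions made so the logic can be exercised outside a browser.

```javascript
// Enable a button only while the browser confirms it is fully visible.
// ObserverCtor is injectable (an assumption of this sketch) for testing.
function guardButton(button, ObserverCtor = globalThis.IntersectionObserver) {
  button.disabled = true; // stay disabled until visibility is confirmed
  const observer = new ObserverCtor((entries) => {
    for (const entry of entries) {
      // isVisible is only set by Intersection Observer v2;
      // where it is missing, treat the button as possibly covered.
      const safe = entry.isIntersecting && entry.isVisible === true;
      entry.target.disabled = !safe;
    }
  }, {
    threshold: 1.0,        // the whole button must be inside the viewport
    trackVisibility: true, // v2: also account for occlusion and visual effects
    delay: 100,            // v2 requires a delay of at least 100 ms
  });
  observer.observe(button);
  return observer;
}
```

Inside the TUI this would be called once per sensitive control, for example guardButton(document.querySelector('#submit')), where the #submit selector is hypothetical. Failing closed on browsers without v2 support is a design choice of this sketch; a real TUI may prefer a different fallback.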

Trusted Domains and Service Workers

Trusted Domains are intended to serve Trusted User Interfaces (TUI) as HTML webpages to be embedded in <iframe> elements, and nothing else. The integrity attribute would contain a hash that would be on a whitelist trusted by both the visiting user, and the top-level website with the <iframe> element.

The measures in the paragraph below are some of the requirements that any competent Auditor would expect to be met before marking the TUI as trusted and putting its hash on a whitelist.

The TUI loaded by a TD should run standard Javascript code to register a Service Worker with a fetch event handler that will intercept all subsequent requests to that domain. The handler will serve cached resources only, and will never hit the server, unless the cache is purged. Since service worker registration isn’t intercepted, no client-side Javascript will subsequently re-register the service worker once it’s installed. The only way to remove the service worker registration would be to go into Developer Tools, look for it and remove it, something only a power-user could do.
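The cache-only behaviour described above could be sketched roughly as follows. The cache name, the 504 status for a miss, and the function name cacheOnly are all assumptions; the page would register this file with navigator.serviceWorker.register(), using whatever script path the Trusted Domain chooses.

```javascript
const CACHE_NAME = 'tui-cache-v1'; // hypothetical cache name

// Answer from the cache only; never fall through to the network.
// cacheStorage is passed in so the policy can be tested with a stand-in.
async function cacheOnly(request, cacheStorage) {
  const cache = await cacheStorage.open(CACHE_NAME);
  const cached = await cache.match(request);
  if (cached) return cached;
  // On a miss, fail instead of contacting the server.
  return new Response('Resource is not in the trusted cache', { status: 504 });
}

// Only wire up the fetch handler when actually running as a service worker.
if (typeof ServiceWorkerGlobalScope !== 'undefined' &&
    self instanceof ServiceWorkerGlobalScope) {
  self.addEventListener('fetch', (event) => {
    event.respondWith(cacheOnly(event.request, caches));
  });
}
```

Returning an error on a cache miss, rather than calling fetch(), is what keeps the server out of the loop once the trusted resources have been stored.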

Service worker caches and other client-side storage can be deleted by the browser (e.g. Apple’s ITP deletes it when users don’t visit the TUI for 7 days), but in that case, the next time a TUI with a trusted hash loads and reinstalls its service worker, it’s safe, since there are no secrets in client-side storage (localStorage, IndexedDB, etc.) to leak. The user simply re-authenticates. (Some browsers now support requestStorageAccess, which lets an embedded iframe ask for access to storage it would otherwise be denied.)

The Service Worker in some cases would lose its caches, or need to receive new resources it didn’t cache before. To do this, any site might load the TUI in an <iframe> and request via postMessage that the service worker see about downloading new trusted resources. The service worker would check whether these resources have been added to whitelists and signed by Auditors it trusts. If so, it may add these new resources to the Cache. In this way, new versions of TUI may be released for users who have long-running Service Workers that were launched before the new TUI was rolled out. The key is, though, that the TUI had to be reviewed by Auditors whose public keys are whitelisted by the Trusted Domain.
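The admission check the service worker performs before caching a new resource might be sketched like this, shown under Node.js for testability. It assumes the whitelists have already had their signatures verified against trusted Auditors, and that each is an object with auditor and hashes fields; those field names, the function names, and the minAuditors threshold are illustrative only.

```javascript
const crypto = require('node:crypto');

// Integrity string for downloaded bytes, in the same form used by SRI.
function integrityOf(bytes) {
  return 'sha384-' + crypto.createHash('sha384').update(bytes).digest('base64');
}

// List the auditors whose (already signature-verified) whitelists
// contain the hash of exactly these bytes.
function vouchedBy(bytes, whitelists) {
  const integrity = integrityOf(bytes);
  return whitelists
    .filter((list) => list.hashes.includes(integrity))
    .map((list) => list.auditor);
}

// Cache the resource only if enough trusted auditors vouch for it.
function mayCache(bytes, whitelists, minAuditors = 1) {
  return vouchedBy(bytes, whitelists).length >= minAuditors;
}
```

Hashing the bytes that were actually downloaded, rather than trusting a hash supplied in the postMessage request, means a malicious embedding site cannot talk the service worker into caching something the Auditors never reviewed.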

User Confidence

So websites can include resources they trust. But what about regular users? They are the ones who will be taking actions in their own name, such as posting comments, paying with tokens, and voting. They need to be confident that their statements aren’t being impersonated, their transactions aren’t being intercepted, and their decisions aren’t being faked.

One thing users can look for is for the Trusted User Interface (TUI) in the iframe to offer to autofill a password, so the user would know that they are on the correct Trusted Domain. There is still a chance, however remote, that the Trusted Domain could collude with the site they visit.

Qbix Browser Extension

Users can go for a simpler option that doesn’t require them to register and set up their own home domain. They could simply install a browser extension from Qbix (or another vendor) which helps them manage which whitelists they trust, and works in the background to mark resources that have been loaded with hashes from these whitelists. The extension could work on Safari, Chrome, and Firefox, on both mobile and desktop, so the user can know which iframes to trust.

Extensions aren’t available everywhere, though: Chrome on Android doesn’t support extensions (but Kiwi browser does). Also, iOS WebViews don’t support Safari Web Extensions. That’s why there is a second option that works even on environments which don’t support browser extensions:

Home Domains

Users may want to visit their own Home Domain (e.g. my-own-qbix-community.com), which would embed the TUI inside an <iframe> hosted from a TD. Now, to compromise the TUI, an attacker would have to hack both the TD and the chosen HD of each user they want to compromise. That could be a lot of servers, which is why decentralized systems can be a lot more secure overall than centralized ones.

Furthermore, users’ Home Domains would have a whitelist of Trusted Domains that they agree to be embedded in through <iframe> elements. That way, the user can visit any website, which can embed a User Interface from a Domain that purports to be safe, and wants to embed some information from the user’s Home Domain as a grandchild in an <iframe>. But the Home Domain would simply load something generic and refuse to trust the postMessage communication from that outer User Interface, since the origin was not in its whitelist of Trusted Domains. However, if the TUI is on a TD trusted by a user’s HD, the result looks like this:

- Any Website (it may host ways for us to create and collaborate on some content)
  - Trusted Domain (I use this to authenticate)
    - Home Domain (hosting my name, avatar, personal info, stuff I share privately)
      - Trusted Domain (I use this to authenticate)

This solution works universally, without having to trust Amazon Web Services and without browser extensions. On the other hand, a browser extension adds extra security, and the user can choose to only use browsers that support it.

Authentication

When a user is convinced that they’ve loaded a Trusted User Interface, they can make great use of it. First of all, they can sign transactions to authenticate themselves to various sites, including the Qbix apps running on their own Home Domain. The full flow is like this:

- They visit a webpage on their Home Domain which has an <iframe src="https://my-trusted-domain.com" integrity="sha256-{{some-whitelisted-hash}}">

- The iframe element loads the Trusted Domain, which is different from the Home Domain

- (Once the environment is loaded, the Qbix Extension, if installed, would mark it as a Trusted User Interface, and could display the whitelists on which its hash appears.)

- The Trusted Domain would query the user for a username that they can enter, in case the Home Domain has multiple users.

- The Trusted User Interface would check that it’s not being clickjacked, and then prompt the user for a password (which can be autofilled by a password manager using e.g. biometrics).

- Then it would derive a userKey private-public key pair from 'username@home.domain' and password. It would save only the public key in localStorage.

- The TUI would derive a sessionKey and sign it with the private key of userKey, and then forget the private userKey. This signature (together with the public userKey) can form a sessionCertificate to authenticate the session.

- The sessionKey can then be used to sign all kinds of requests to home.domain, which will be accompanied by the sessionKey.publicKey as well as the sessionCertificate.
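The key-handling steps in this flow can be sketched as follows, under Node.js so the example is runnable outside a browser. Several details are assumptions not stated in the article: scrypt as the key derivation function with the identity string as salt, Ed25519 for both keys, a randomly generated sessionKey, and the shape of the certificate object.

```javascript
const crypto = require('node:crypto');

// DER prefix that wraps a raw 32-byte Ed25519 seed as a PKCS#8 private key.
const ED25519_PKCS8_PREFIX = Buffer.from('302e020100300506032b657004220420', 'hex');

const exportKey = (publicKey) => publicKey.export({ format: 'der', type: 'spki' });
const importKey = (der) => crypto.createPublicKey({ key: der, format: 'der', type: 'spki' });

// Deterministically derive the userKey pair from 'username@home.domain' and password.
function deriveUserKey(username, homeDomain, password) {
  const seed = crypto.scryptSync(password, `${username}@${homeDomain}`, 32);
  const privateKey = crypto.createPrivateKey({
    key: Buffer.concat([ED25519_PKCS8_PREFIX, seed]),
    format: 'der',
    type: 'pkcs8',
  });
  return { privateKey, publicKey: crypto.createPublicKey(privateKey) };
}

// Create a sessionKey and certify it with the userKey. The caller should
// drop its reference to the private userKey once this returns.
function startSession(userKey) {
  const sessionKey = crypto.generateKeyPairSync('ed25519');
  const sessionPublic = exportKey(sessionKey.publicKey);
  const sessionCertificate = {
    userPublicKey: exportKey(userKey.publicKey).toString('base64'),
    sessionPublicKey: sessionPublic.toString('base64'),
    signature: crypto.sign(null, sessionPublic, userKey.privateKey).toString('base64'),
  };
  return { sessionKey, sessionCertificate };
}

// Sign a request body with the sessionKey.
function signRequest(session, body) {
  return crypto.sign(null, Buffer.from(body), session.sessionKey.privateKey).toString('base64');
}

// Home Domain side: check the certificate chain, then the request signature.
function verifyRequest(body, signature, cert, expectedUserPublicKey) {
  if (cert.userPublicKey !== expectedUserPublicKey) return false;
  const sessionDer = Buffer.from(cert.sessionPublicKey, 'base64');
  const certOk = crypto.verify(
    null, sessionDer,
    importKey(Buffer.from(cert.userPublicKey, 'base64')),
    Buffer.from(cert.signature, 'base64')
  );
  if (!certOk) return false;
  return crypto.verify(null, Buffer.from(body), importKey(sessionDer), Buffer.from(signature, 'base64'));
}
```

Because the userKey is derived from the identity and password alone, the same user can recreate it on any device, while the Home Domain only ever needs to store the public half.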

After authenticating with their Home Domain, the user can visit any websites, which might load Trusted User Interfaces from the Trusted Domain inside one or more <iframe> elements. The Trusted Domain in turn would remember the Home Domain and then load any interfaces from the Home Domain inside a grandchild <iframe>. The Home Domain would ascertain that the origin of the request is indeed coming from a whitelisted Trusted Domain, and then cooperate with it in good faith using postMessage and other means. (Some of this accomplishes what xAuth was designed to do over a decade ago.)
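The origin check performed by the Home Domain might be sketched like this. The whitelist contents, the function name isFromTrustedDomain, and the reply message are placeholders; only the use of event.origin and postMessage reflects the mechanism described above.

```javascript
// Origins the Home Domain agrees to cooperate with (hypothetical entry).
const TRUSTED_DOMAINS = new Set(['https://my-trusted-domain.com']);

// True only if the message was sent by a whitelisted Trusted Domain.
// event.origin is set by the browser and cannot be forged by the sender.
function isFromTrustedDomain(event, trustedOrigins = TRUSTED_DOMAINS) {
  return trustedOrigins.has(event.origin);
}

// In the Home Domain's page, ignore everything from unknown origins.
if (typeof window !== 'undefined' && typeof window.addEventListener === 'function') {
  window.addEventListener('message', (event) => {
    if (!isFromTrustedDomain(event)) {
      return; // keep showing the generic, non-personal content
    }
    // Reply only to the verified origin, never to '*'.
    event.source.postMessage({ ok: true }, event.origin);
  });
}
```

Matching the full origin string (scheme, host and port) avoids mistakes such as accepting https://my-trusted-domain.com.attacker.example through a prefix or substring comparison.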

The user will finally be able to see their own name, friends, etc. displayed back to them. However, the websites the user visits will have no idea what their home domain is, because the Trusted Domain is guaranteed not to send this information anywhere (even its own web servers). The Trusted User Interface is pretty minimal, and can be audited by many parties. The apps and iframe widgets on the Home Domain do the rest.

A user would be able to read, write and admin information that they’ve been granted access to. For example, they’d be able to see avatars with names and photos for userIds of friends that have sent them this information.

Web app developers should be cautioned that apps running on the Home Domain should not return any information that can be linked to a specific user (including userId, public picture, public user name) outside of an authenticated session with a user authorized to see it. Instead, send a random picture and name. That’s because this information can theoretically be scraped from the Home Domain by others who wish to track users across websites.

To give an example: Right now, Facebook returns app-scoped user IDs to each application, so they can’t use those to track users across apps. However, they can get the public profile picture and name of the user, and then scrape Facebook to try to find the name and picture of that user. Often, Google has already done the scraping for them, as Facebook allows GoogleBot to crawl and index its profiles. So it could actually be trivial to deanonymize users based on just their photo and name.

In the system described here, by contrast, users wishing to remain anonymous can register a Home Domain and the Trusted Domain will never reveal it, so the website operators won’t necessarily even know what domain to scrape.

(In fact, the Home Domain might actually be a native app that’s intercepting WebView requests, and not even corresponding to any server on the internet. In this case, however, a user would only be able to use this inside their phone, and not, say, on a computer in the library.)

We originally began architecting this system over a decade ago, and now it is possible to do with browsers. The situation is described here: